Customize LLMs with your data

7. 1. 2026

Training a base LLM from scratch is affordable only for the biggest companies; the costs run into millions of dollars. It requires very advanced skills, a lot of data and a lot of hardware.

Fortunately, there are affordable techniques that let you fine-tune and customize base LLMs for your specific needs, based on your own data.

The precondition is to use one of the clouds, e.g. OCI from Oracle, where these base models are available together with the fine-tuning workflows and features that we will describe.

These are the most common techniques for using LLMs with your custom data:

- In-context learning / few shot prompting

- Fine-tuning a pretrained model

- Retrieval Augmented Generation (RAG)

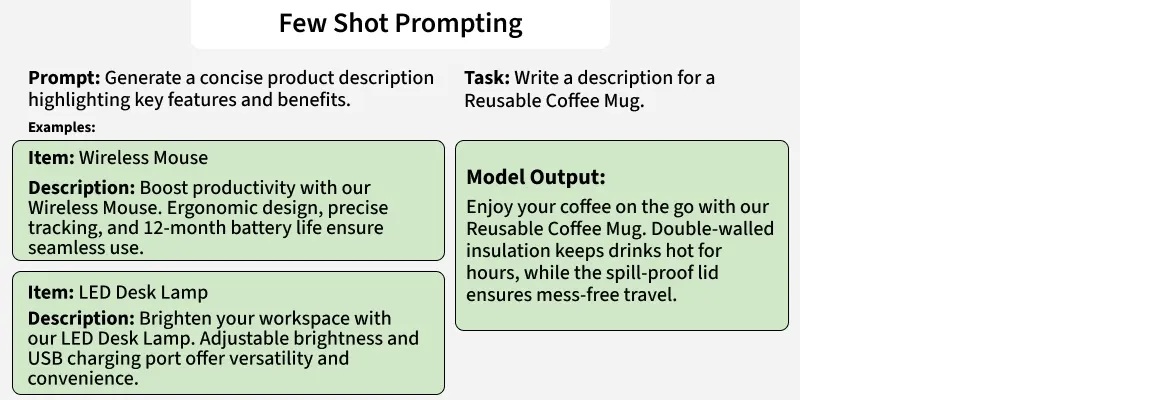

In-context learning / few shot prompting

The user teaches the model how to perform a specific task by including examples and demonstrations in the prompt. A popular method is chain-of-thought prompting. The limitation is the model's context length, but this should be the first step when customizing an LLM with your data.
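As a minimal sketch of few-shot prompting, the Python function below assembles an instruction, a few input/output demonstrations and a new query into one prompt string. The function name, the `Input:`/`Output:` layout and the sentiment examples are illustrative assumptions, not an OCI API; the resulting string would be sent to whichever hosted model you use. Keep in mind that every demonstration consumes part of the model's context length.

```python
from typing import List, Tuple


def build_few_shot_prompt(instruction: str,
                          examples: List[Tuple[str, str]],
                          query: str) -> str:
    """Assemble an instruction, worked examples and a new query into one prompt."""
    parts = [instruction.strip(), ""]
    for question, answer in examples:
        # Each demonstration shows the model the expected input/output pattern.
        parts.append(f"Input: {question}")
        parts.append(f"Output: {answer}")
        parts.append("")
    # The new query ends with an empty "Output:" for the model to complete.
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)


if __name__ == "__main__":
    prompt = build_few_shot_prompt(
        "Classify the sentiment of customer feedback as positive or negative.",
        [("The delivery was fast and the product works.", "positive"),
         ("The app crashes every time I log in.", "negative")],
        "Support solved my issue within minutes.",
    )
    print(prompt)
```

Putting the demonstrations in the same format as the final query lets the model infer the task from the pattern alone, without any change to its weights.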

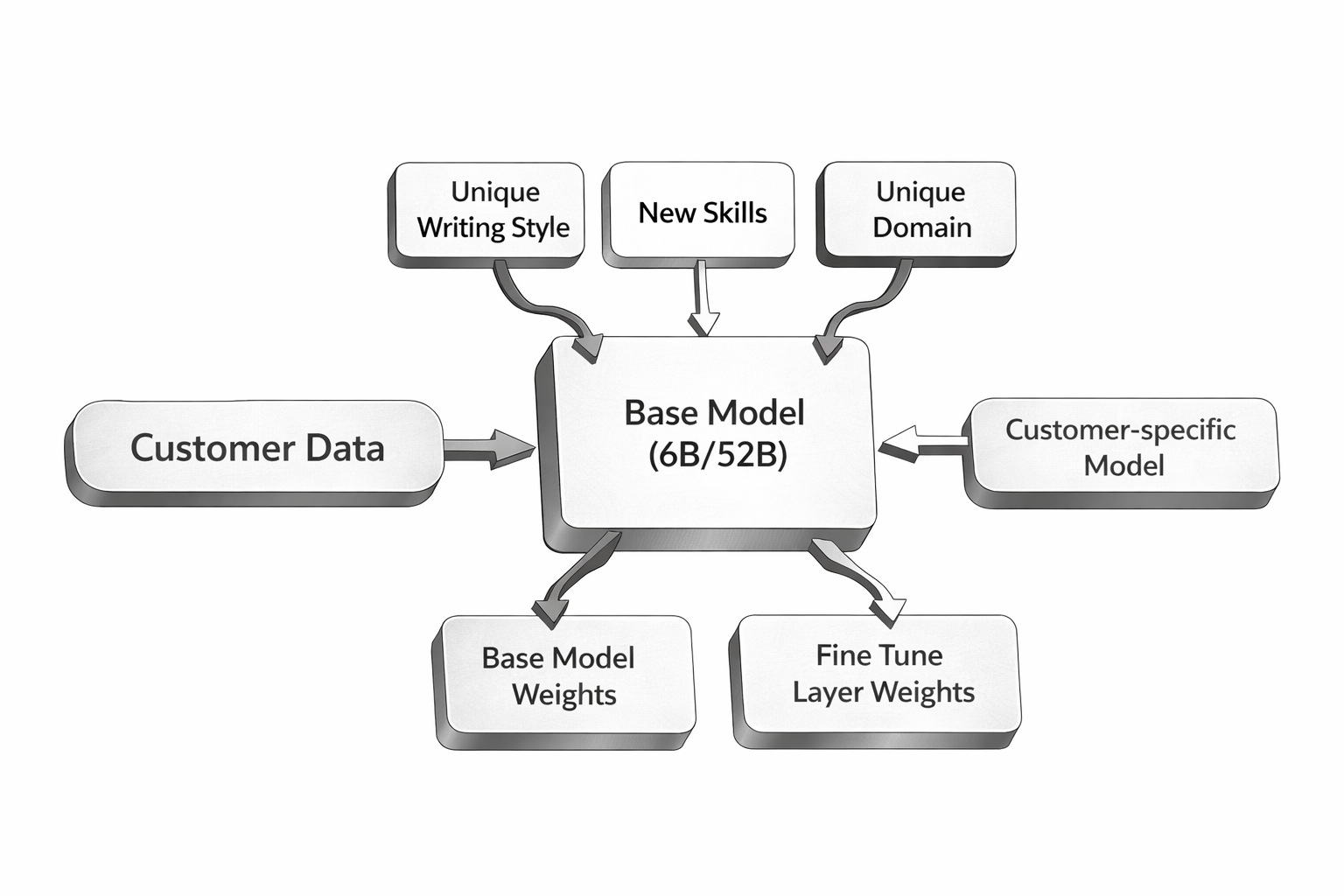

Fine-tuning a pretrained model

Fine-tuning means further optimizing a pretrained model on a smaller, domain-specific dataset. It is used when the pretrained model does not perform as expected on a specific data domain and we want to teach the model something new and specific. We can adapt the model to a specific tone and style and have it learn the preferences of customers and users.

The benefits are:

- improve model performance on specific tasks

- it is more effective than few-shot prompting at improving model performance on domain-specific data

- by customizing the model to the domain-specific data (adapting the weights of the fine-tuned layers), it can better understand the domain and generate better responses

- improving the model efficiency

- reducing the number of tokens needed to perform the task

- it can reduce the size of the model needed for the required tasks, which is more ecological
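Fine-tuning starts with a training dataset of example prompts and the completions you expect. The sketch below serializes such pairs as JSON Lines with `prompt` and `completion` keys, which is, to our understanding, the layout OCI Generative AI expects for custom model training data; please verify the exact format and size limits for your chosen base model in the current OCI documentation. The helper name `to_jsonl` and the "Product X" example pairs are hypothetical.

```python
import json
from typing import Iterable, Tuple


def to_jsonl(pairs: Iterable[Tuple[str, str]]) -> str:
    """Serialize (prompt, completion) pairs as JSON Lines, one object per line."""
    lines = []
    for prompt, completion in pairs:
        # Reject empty examples instead of skipping them: they usually signal a data problem.
        if not prompt.strip() or not completion.strip():
            raise ValueError("each example needs a non-empty prompt and completion")
        # ensure_ascii=False keeps non-English characters readable in the file.
        lines.append(json.dumps({"prompt": prompt, "completion": completion},
                                ensure_ascii=False))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical domain examples; real data would come from your own sources.
    examples = [
        ("What is the warranty period for Product X?",
         "Product X comes with a 24-month warranty."),
        ("Can Product X export reports?",
         "Yes, Product X exports reports to PDF and CSV."),
    ]
    # Save this output as a .jsonl file before uploading it for fine-tuning.
    print(to_jsonl(examples), end="")
```

The quality and consistency of these pairs matter more than their number: the completions are exactly the tone, style and content the fine-tuned model will learn to reproduce.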

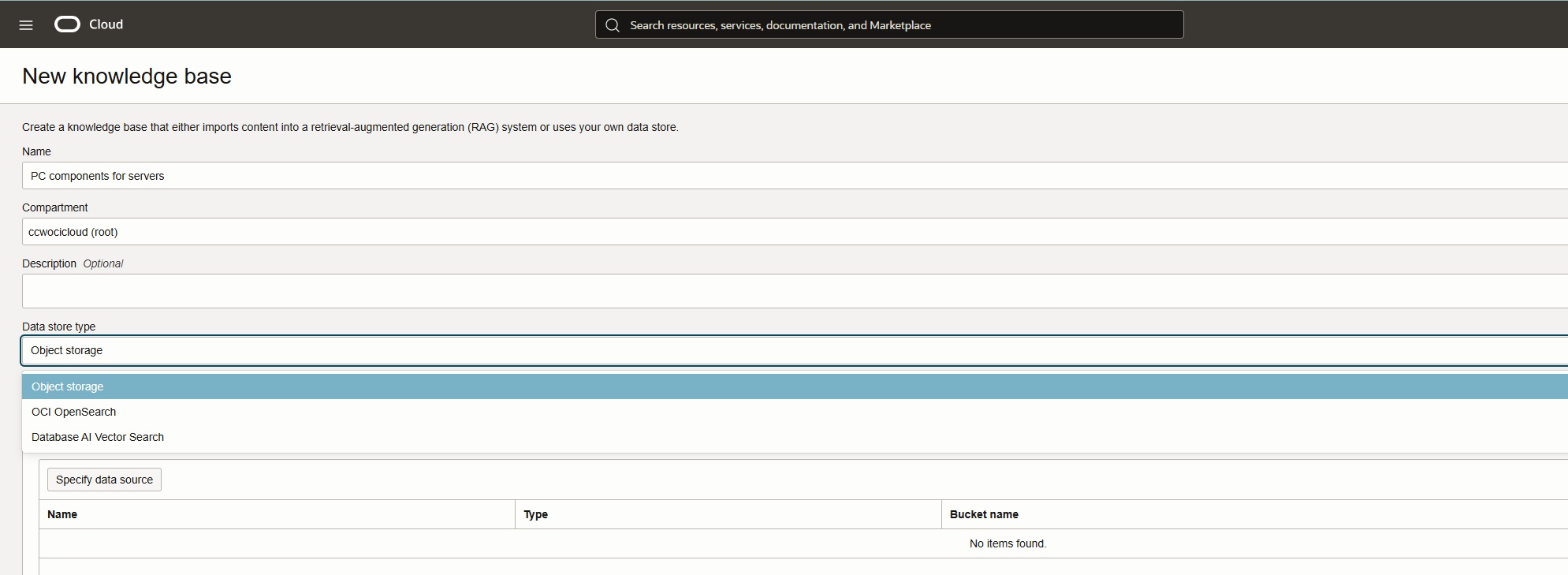

Retrieval Augmented Generation (RAG)

The LLM can query knowledge bases such as databases, wikis and vector databases, and return grounded responses. It does not require custom models. RAG is a method of generating text using additional information fetched from an external source.

The external source can be:

- documents in formats such as TXT or PDF stored in OCI Object Storage

- Oracle Database AI Vector Search

- OCI OpenSearch
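To illustrate the retrieval step of RAG, the sketch below ranks documents by similarity to the question and places the best matches into the prompt as context. It is a toy under stated assumptions: a word-count vector stands in for a real embedding model, and an in-memory list stands in for a vector store such as Oracle Database AI Vector Search or OCI OpenSearch. All function names and documents are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_rag_prompt(query: str, docs: List[str], k: int = 2) -> str:
    """Ground the question in the retrieved documents."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return ("Answer using only the context below. If the answer is not there, say so.\n\n"
            f"Context:\n{context}\n\nQuestion: {query}\nAnswer:")


if __name__ == "__main__":
    docs = [
        "Product X supports export to PDF and CSV.",
        "Our office is open Monday to Friday.",
        "Product X requires a license key for activation.",
    ]
    print(build_rag_prompt("Which license key does Product X require?", docs))
```

Because the model only sees the retrieved context at request time, the data can be updated in the knowledge base without retraining anything.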

Splitting and chunking methods, the overlap between the text chunks produced by document loaders, and the embedding approach are a science of their own.
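Chunking with overlap can be sketched as a sliding window: each chunk holds `chunk_size` words and shares `overlap` words with the previous one, so text near a chunk boundary appears in both neighbouring chunks and keeps some of its context. Counting words is a simplifying assumption; real document loaders often split by characters, tokens or sentences, and the default sizes below are arbitrary.

```python
from typing import List


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> List[str]:
    """Split text into word-based chunks; consecutive chunks share `overlap` words."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap  # how far the window moves each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the window has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks


if __name__ == "__main__":
    sample = " ".join(str(i) for i in range(10))
    print(chunk_text(sample, chunk_size=4, overlap=1))
```

A larger overlap preserves more context across boundaries at the cost of more chunks to embed and store.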

We will discuss each of these ways of using your custom data with LLMs in more detail in future blog posts.

You can use your custom data with an LLM without compromising security, keeping it only in your own tenancy, and there are methods ranging from simple to complex to fine-tune hosted LLM clusters, prompt them, or point them at your custom data.

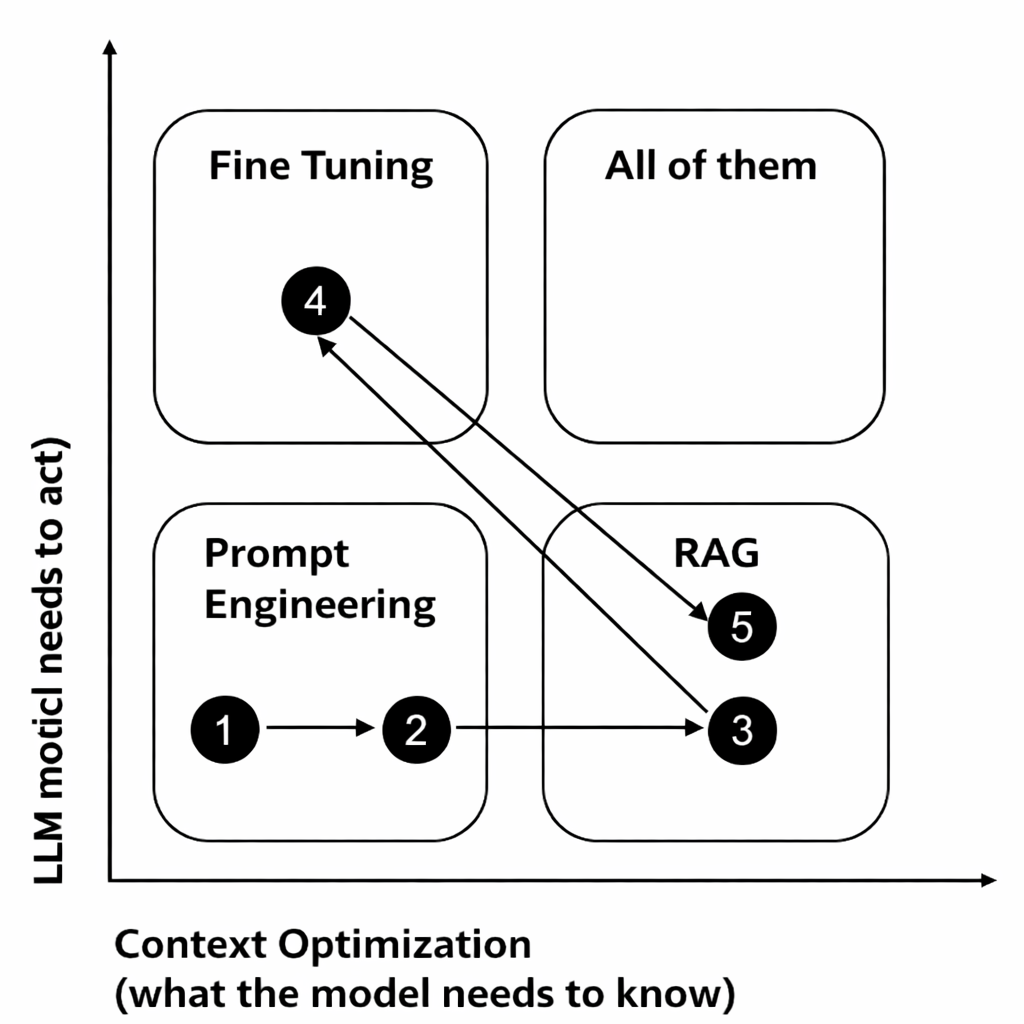

Step 1: try a simple prompt

Step 2: try few-shot prompting

Step 3: add RAG

Step 4: fine-tune the model

Step 5: optimize retrieval on the fine-tuned model

Enterprises can improve their efficiency by leveraging LLMs with their custom data. If they choose OCI and its features, they do not compromise security or privacy and still get the benefits of LLMs and GenAI. This can rapidly improve their work and services by providing accurate, fast information, for example about products and features or, in pharmacy, about details that are important for patients.

Take action now: contact us, and we will prepare a PoC for you and deliver a tailored, best-fit GenAI solution that uses LLMs with your custom data.
